Relevant Symposia and Workshops from NIPS 2017

- Symposium on Interpretable Machine, presented poster [webpage]

- Transparent and Interpretable ML in Safety Critical Environments workshop [webpage]

- Black in AI workshop and dinner, presented poster [webpage]

- Learning Disentangled Representations: from Perception to Control [website]

- Interpreting, Explaining and Visualizing Deep Learning [website]

- Cognitively Informed Artificial Intelligence (CIAI) workshop [website]

The Promise and Peril of Human Evaluation for Model Interpretability

Abstract

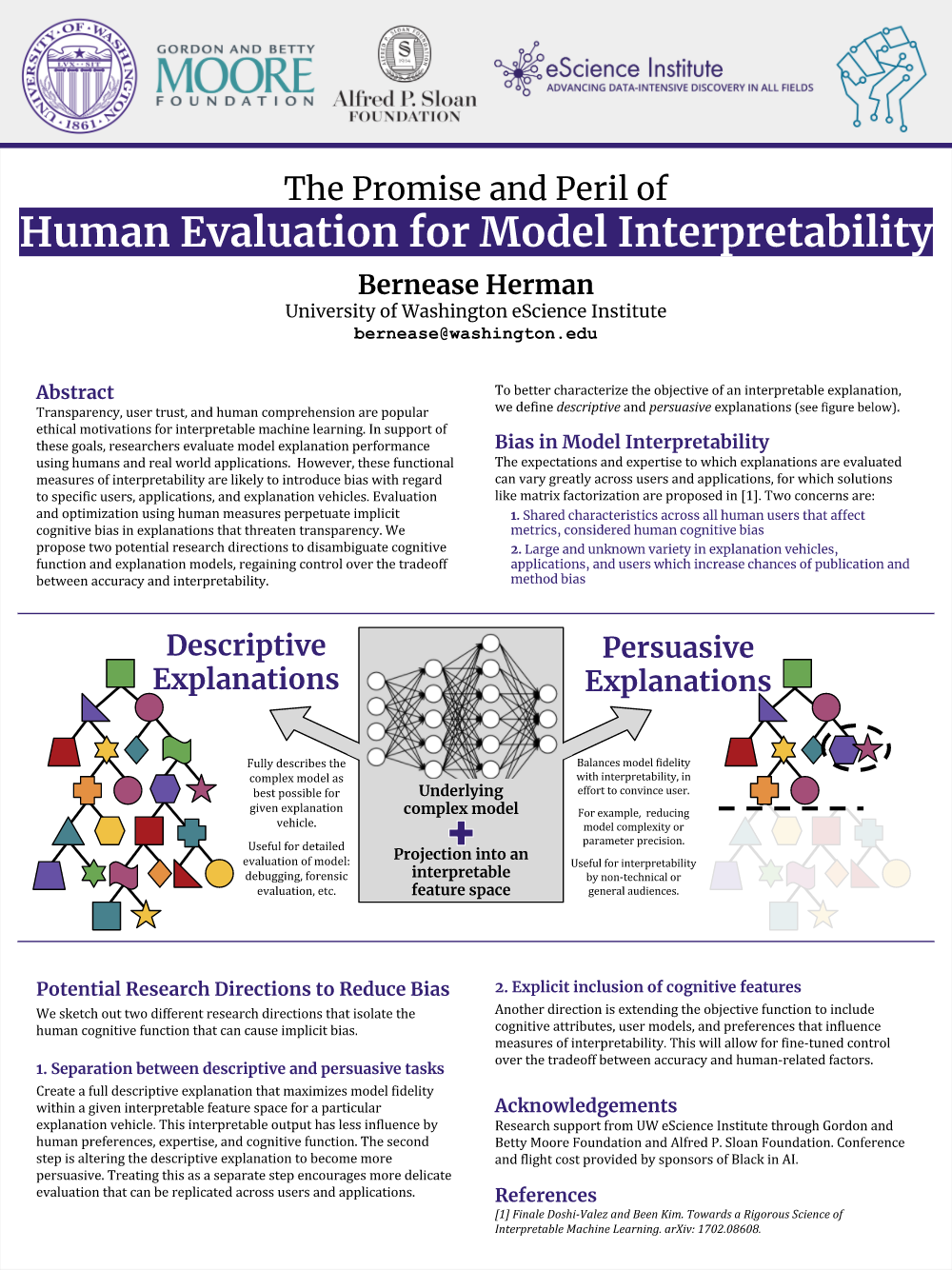

Transparency, user trust, and human comprehension are popular ethical motivations for interpretable machine learning. In support of these goals, researchers evaluate model explanation performance using humans and real world applications. However, these functional measures of interpretability are likely to introduce bias with regard to specific users, applications, and explanation vehicles. Evaluation and optimization using human measures perpetuate implicit cognitive bias in explanations that threaten transparency. We propose two potential research directions to disambiguate cognitive function and explanation models, regaining control over the tradeoff between accuracy and interpretability.

Paper

The Promise and Peril of Human Evaluation for Model Interpretability

Published on arXiv as a part of the Proceedings of NIPS 2017 Symposium on Interpretable Machine Learning

Poster

Extended poster [png]. Presented at NIPS 2017 Symposium on Interpretable Machine Learning.

Original Poster [png]. Presented at the Black In AI workshop co-located with NIPS 2017.